At Arocha & Associates, we are working on an exciting project that brings together actuarial science, data science, and machine learning. The aim is to explore how different statistical models perform when predicting insurance claims, one of the most important tasks actuaries face in practice.

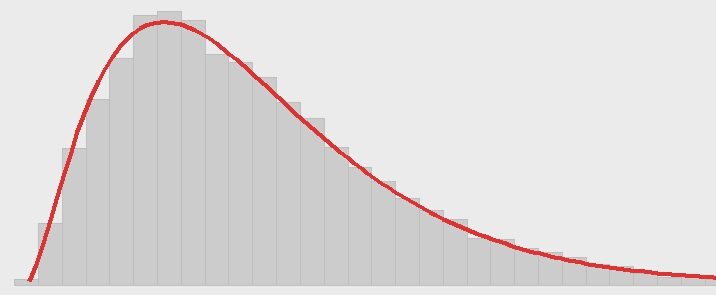

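Traditionally, actuaries have relied on Generalized Linear Models (GLMs). These models are transparent, well understood, and embedded in regulation and professional practice. However, GLMs have limitations: they assume that each predictor acts linearly and additively on the link scale (for example, on the log-odds of a claim), so nonlinear effects and interactions between variables must be specified by hand through transformations, bands, or explicit interaction terms.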
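To make the linearity assumption concrete, here is the structure of a logistic GLM for a binary claim indicator, assuming a logit link (claim counts would more typically be modelled with a Poisson GLM and a log link). Each predictor $x_{ij}$ shifts the log-odds of a claim for policy $i$ by a fixed amount $\beta_j$ per unit, whatever the values of the other predictors:

$$
\log\frac{p_i}{1-p_i} = \beta_0 + \sum_{j=1}^{k} \beta_j\, x_{ij}
$$

An interaction such as horsepower × driver age only enters the model if the modeller adds it explicitly, for example as an extra term $\beta_{12}\, x_{i1} x_{i2}$; a nonlinear effect likewise needs a transformation (banding, a spline) chosen in advance.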

In recent years, Gradient Boosting Machines (GBMs) have emerged as powerful alternatives. A GBM is an ensemble that adds many shallow decision trees one at a time, each fitted to correct the errors of the trees before it, so it can uncover subtle, nonlinear, and high-order interaction patterns without those patterns being specified in advance. In insurance applications, these patterns often matter: think of how vehicle age, horsepower, and driver experience might interact in complex ways to influence claim probability.
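The boosting idea can be sketched from scratch in plain Python. This is a minimal, deliberately simplified illustration, not how LightGBM or XGBoost are implemented: it starts from the log-odds of the overall claim rate and, at each round, fits a small least-squares regression tree (depth 2 here, so it can represent two-way interactions) to the residuals $y - p$, which are the negative gradient of the log-loss with respect to the log-odds. Leaves simply store the mean residual; there is no per-leaf line search, Newton step, or regularization. The features `(driver_age, horsepower)` and the rule generating the claims are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_tree(X, r, idx, depth, min_leaf):
    """Greedy least-squares regression tree on rows idx, fitted to targets r."""
    total = sum(r[i] for i in idx)
    value = total / len(idx)  # leaf value = mean residual in the node
    if depth == 0 or len(idx) < 2 * min_leaf:
        return ("leaf", value)
    base = total * total / len(idx)
    best_gain, best = 1e-12, None
    for j in range(len(X[0])):
        order = sorted(idx, key=lambda i: X[i][j])
        left_sum = 0.0
        for k in range(1, len(order)):
            left_sum += r[order[k - 1]]
            if k < min_leaf or len(order) - k < min_leaf:
                continue
            if X[order[k - 1]][j] == X[order[k]][j]:
                continue  # no threshold separates equal values
            right_sum = total - left_sum
            # Reduction in squared error from splitting between positions k-1 and k.
            gain = left_sum ** 2 / k + right_sum ** 2 / (len(order) - k) - base
            if gain > best_gain:
                best_gain = gain
                best = (j, (X[order[k - 1]][j] + X[order[k]][j]) / 2)
    if best is None:
        return ("leaf", value)
    j, thr = best
    left = [i for i in idx if X[i][j] <= thr]
    right = [i for i in idx if X[i][j] > thr]
    return ("split", j, thr,
            fit_tree(X, r, left, depth - 1, min_leaf),
            fit_tree(X, r, right, depth - 1, min_leaf))


def predict_tree(node, x):
    while node[0] == "split":
        _, j, thr, left, right = node
        node = left if x[j] <= thr else right
    return node[1]


def fit_gbm(X, y, n_trees=50, lr=0.3, depth=2, min_leaf=20):
    base_rate = sum(y) / len(y)
    f0 = math.log(base_rate / (1 - base_rate))  # start from the overall log-odds
    F = [f0] * len(X)
    idx = list(range(len(X)))
    trees = []
    for _ in range(n_trees):
        # Negative gradient of the log-loss w.r.t. the log-odds F is y - p.
        resid = [t - sigmoid(f) for t, f in zip(y, F)]
        tree = fit_tree(X, resid, idx, depth, min_leaf)
        trees.append(tree)
        F = [f + lr * predict_tree(tree, x) for f, x in zip(F, X)]
    return f0, lr, trees


def predict_proba(model, x):
    f0, lr, trees = model
    return sigmoid(f0 + lr * sum(predict_tree(t, x) for t in trees))


def log_loss(y, probs):
    return -sum(t * math.log(q) + (1 - t) * math.log(1 - q)
                for t, q in zip(y, probs)) / len(y)


# Hypothetical data: (driver_age, horsepower); claims are much likelier only
# when the driver is young AND the car is powerful.
rng = random.Random(42)
X = [(rng.uniform(18, 80), rng.uniform(60, 300)) for _ in range(1000)]
y = [int(rng.random() < (0.4 if age < 25 and hp > 200 else 0.05)) for age, hp in X]

model = fit_gbm(X, y)
probs = [predict_proba(model, x) for x in X]
base_rate = sum(y) / len(y)
base_loss = log_loss(y, [base_rate] * len(y))
boosted_loss = log_loss(y, probs)
print(f"training log-loss: constant {base_loss:.4f}, boosted {boosted_loss:.4f}")
```

Each tree only nudges the prediction by `lr` times its leaf value, so the ensemble builds up the young-driver × high-horsepower effect gradually. With `depth=1` every tree would depend on a single variable, and the summed model would stay additive on the log-odds scale, just like a main-effects GLM.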

The Project

We designed a synthetic dataset that mimics an auto insurance portfolio: policyholders with different ages, driving histories, telematics data, and vehicle characteristics. Importantly, we built in signals that are deliberately nonlinear and interaction-heavy: patterns that a standard main-effects GLM cannot easily capture but a GBM can.
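A generator for this kind of portfolio might look like the sketch below. Every feature name, distribution, and coefficient here is a hypothetical choice for illustration, not the dataset used in the project. The signal is built on the log-odds scale from an additive part, a U-shaped age effect, and two interactions, and each policy's claim indicator is then drawn with the resulting probability.

```python
import math
import random


def simulate_portfolio(n, seed=0):
    """Simulate a toy auto portfolio (all features and coefficients are hypothetical)."""
    rng = random.Random(seed)
    policies = []
    for _ in range(n):
        driver_age = rng.uniform(18, 80)
        prior_claims = rng.choice((0, 0, 0, 1, 1, 2))  # driving history
        harsh_brakes = rng.expovariate(0.5)  # telematics: events per 100 km, mean 2
        vehicle_age = rng.uniform(0, 20)
        horsepower = rng.uniform(60, 300)

        # Additive part on the log-odds scale: what a main-effects GLM represents directly.
        eta = -2.5 + 0.4 * prior_claims + 0.1 * harsh_brakes
        # Nonlinear part: U-shaped age effect (young and elderly drivers riskier).
        eta += 0.001 * (driver_age - 45) ** 2
        # Interaction: high horsepower is risky mainly for young drivers.
        if driver_age < 25 and horsepower > 200:
            eta += 1.2
        # Interaction: harsh braking matters more in older vehicles.
        eta += 0.05 * harsh_brakes * (vehicle_age / 10)

        p_claim = 1.0 / (1.0 + math.exp(-eta))
        policies.append({
            "driver_age": driver_age,
            "prior_claims": prior_claims,
            "harsh_brakes": harsh_brakes,
            "vehicle_age": vehicle_age,
            "horsepower": horsepower,
            "claim": int(rng.random() < p_claim),
        })
    return policies


portfolio = simulate_portfolio(10_000, seed=2024)
claim_rate = sum(p["claim"] for p in portfolio) / len(portfolio)
print(f"simulated claim rate: {claim_rate:.3f}")
```

Fixing the seed makes the portfolio reproducible, so a GLM and a GBM can be compared on exactly the same data.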

The goal is to demonstrate, in a controlled environment, how:

- GLMs perform well on linear, additive effects but miss nonlinear signals unless these are explicitly engineered into the model.

- GBMs adapt to complex structures and deliver stronger predictive power, as measured by metrics such as the Area Under the ROC Curve (AUC).

- Understanding these differences helps actuaries decide when to use GLMs, GBMs, or a combination of both in pricing and risk management.
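AUC has a direct probabilistic reading: it is the probability that a randomly chosen policy with a claim receives a higher predicted score than a randomly chosen policy without one, with ties counting one half. A minimal pairwise sketch follows; it is quadratic in the sample size, so it only suits small samples, while library implementations such as scikit-learn's `roc_auc_score` compute the same quantity more efficiently.

```python
def auc(y_true, scores):
    """AUC as P(score of a random claim > score of a random non-claim), ties counting 1/2.

    Pairwise O(n_pos * n_neg) version, fine for small samples.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one claim and one non-claim")
    wins = 0.0
    for a in pos:
        for b in neg:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

An AUC of 0.5 means the model ranks policies no better than chance; 1.0 means every claim is scored above every non-claim. Because AUC depends only on the ranking, it can be computed on predicted probabilities or on the linear predictor alike.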

Why It Matters

For students, this project is a chance to see how classical actuarial models compare with modern machine learning. You'll see that actuarial practice is evolving: regulators, insurers, and reinsurers are beginning to acknowledge the strengths of ML methods, while also debating transparency and fairness.

As future actuaries, you will be asked not only to apply models, but also to justify them: Why use a GLM here? Why a GBM there? What trade-offs exist between interpretability, accuracy, and fairness? This project is a small but concrete step into that important conversation.

What You Can Do

If you want to dive deeper, try replicating the experiment in Python or R. Start with a GLM, then switch to a GBM (e.g., LightGBM or XGBoost) and compare their performance on held-out data. Ask yourself: how do the model assumptions influence the results? And what would regulators or clients want to see?
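One way to see how model assumptions drive the results, before reaching for LightGBM or XGBoost, is the extreme toy case below, written in plain Python so it runs without extra packages. The claim signal is a pure interaction of two hypothetical ±1 features (claim probability about 0.88 when they agree, about 0.12 when they differ). A logistic GLM with main effects only cannot rank policies better than chance, while the same GLM with an explicit `x1 * x2` term recovers the signal; a GBM with trees of depth two or more can pick it up without that term being specified. The gradient-descent fit is a simplification for illustration; in practice you would use a proper GLM routine such as `glm()` in R or `statsmodels` in Python.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def simulate(n, seed=0):
    """Two hypothetical +/-1 features whose only effect on claims is through x1 * x2."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        x1, x2 = rng.choice((-1, 1)), rng.choice((-1, 1))
        p = sigmoid(2.0 * x1 * x2)  # about 0.88 if x1 == x2, about 0.12 otherwise
        X.append((x1, x2))
        y.append(1 if rng.random() < p else 0)
    return X, y


def fit_logistic(rows, y, lr=1.0, steps=100):
    """Logistic GLM (logit link) fitted by batch gradient descent on the mean log-loss."""
    k, n = len(rows[0]), len(rows)
    beta = [0.0] * k
    for _ in range(steps):
        grad = [0.0] * k
        for r, t in zip(rows, y):
            err = sigmoid(sum(b * v for b, v in zip(beta, r))) - t
            for j in range(k):
                grad[j] += err * r[j]
        beta = [b - lr * g / n for b, g in zip(beta, grad)]
    return beta


def auc(y, scores):
    """Probability that a random claim outscores a random non-claim (ties count 1/2)."""
    pos = [s for t, s in zip(y, scores) if t == 1]
    neg = [s for t, s in zip(y, scores) if t == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


X, y = simulate(2000, seed=7)
designs = {
    "main effects only": [(1, x1, x2) for x1, x2 in X],  # intercept, x1, x2
    "with x1*x2 term": [(1, x1, x2, x1 * x2) for x1, x2 in X],
}
results = {}
for name, rows in designs.items():
    beta = fit_logistic(rows, y)
    # The linear predictor ranks policies exactly as the fitted probabilities do.
    scores = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    results[name] = auc(y, scores)
    print(f"{name}: AUC = {results[name]:.3f}")
```

The point is not that GLMs are weak: once the right interaction is supplied, the GLM is as good as any model here. The question is who finds the structure, the modeller or the algorithm, and what that means for interpretability.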

For a good introduction to GBMs (and machine learning in general), I recommend https://statquest.org/video-index/.

In the end, the project’s message is simple: actuarial science is not static—it’s expanding. By mastering both traditional and modern tools, you’ll be better prepared for the challenges and opportunities of tomorrow’s insurance world.